regularization machine learning mastery

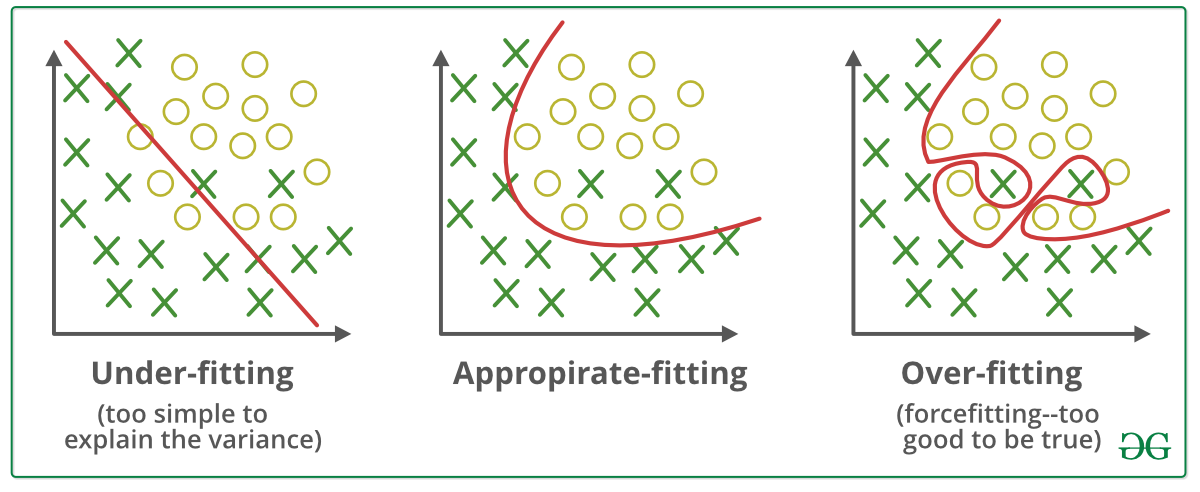

Optimization function = Loss + Regularization term. Regularization is a set of techniques that can prevent overfitting in neural networks and thus improve the accuracy of a deep learning model when it faces completely new data from the problem domain.
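The objective of a loss plus a regularization term is commonly written as follows. The symbols are illustrative notation rather than anything taken from the original post: $\mathbf{w}$ stands for the model weights, $L$ for the data loss, $R$ for the penalty, and $\lambda \ge 0$ for the regularization strength.

$$
J(\mathbf{w}) = L(\mathbf{w}) + \lambda\, R(\mathbf{w}), \qquad
R_{L2}(\mathbf{w}) = \sum_j w_j^2, \qquad
R_{L1}(\mathbf{w}) = \sum_j |w_j|
$$

Setting $\lambda = 0$ recovers the unregularized loss; larger values push the weights harder towards zero. Some texts scale the penalty by an extra constant such as $1/(2m)$, which changes the numeric value of $\lambda$ but not the idea.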

The effect of regularization on regression using the normal equation can be seen in the following plot for a regression of order 10. Regularization is one of the most basic and important concepts in machine learning.

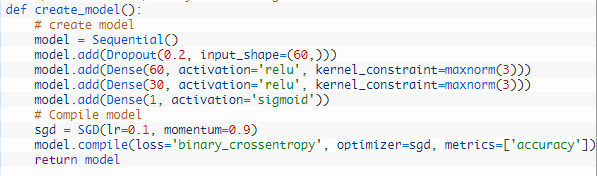

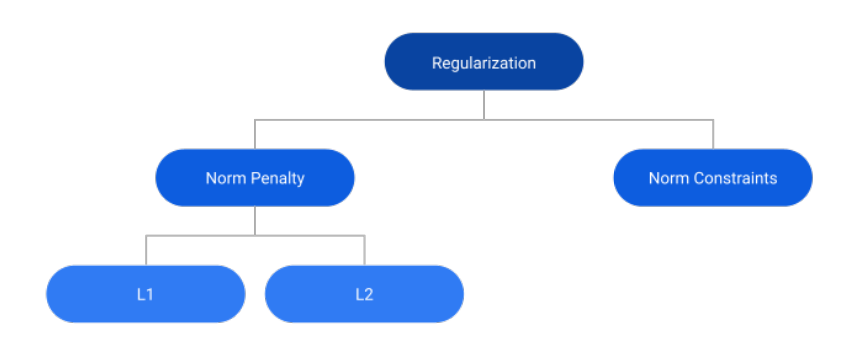

In simple words, regularization discourages learning a more complex or flexible model, so as to avoid the risk of overfitting. The commonly used regularization techniques are L1 regularization, L2 regularization, and dropout. In this post you will discover the dropout regularization technique and how to apply it to your models in Python with Keras.

L2 regularization is also known as Ridge Regression. With dropout, a single model can be used to simulate having a large number of different network architectures.

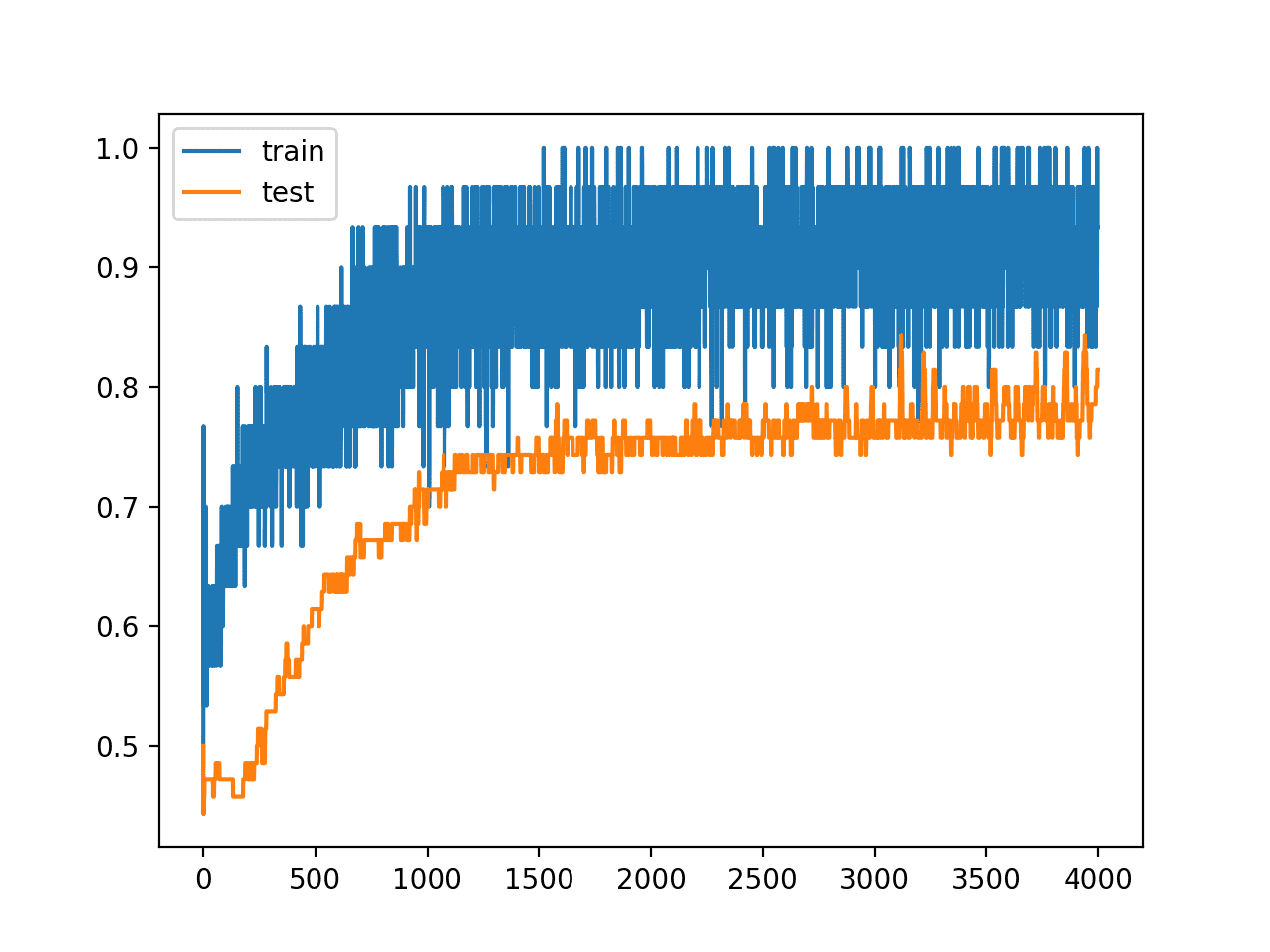

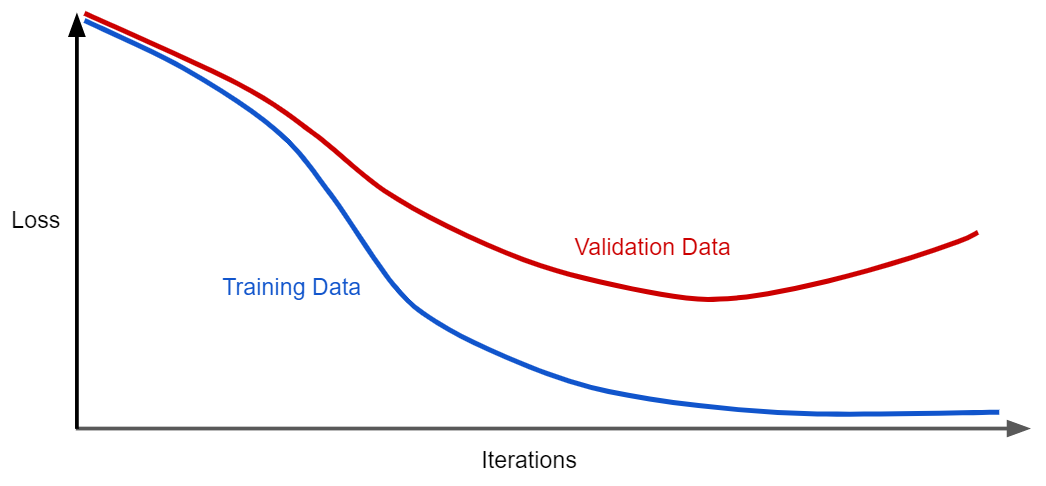

There are multiple types of weight regularization, such as the L1 and L2 vector norms, and each requires a hyperparameter that must be configured. Sometimes a machine learning model performs well on the training data but does not perform well on the test data. To create a less complex (parsimonious) model when a dataset has a large number of features, regularization techniques such as L1 and L2 are commonly used.
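To make the two vector-norm penalties concrete, here is a minimal pure-Python sketch. The function names and the `lam` hyperparameter value are hypothetical and chosen only for illustration.

```python
def l1_penalty(weights, lam):
    """L1 (lasso-style) penalty: lam times the sum of absolute weights."""
    return lam * sum(abs(w) for w in weights)


def l2_penalty(weights, lam):
    """L2 (ridge-style) penalty: lam times the sum of squared weights."""
    return lam * sum(w * w for w in weights)


weights = [0.5, -2.0, 0.0, 1.5]
lam = 0.1  # hypothetical regularization strength; must be tuned in practice
print(l1_penalty(weights, lam))
print(l2_penalty(weights, lam))
```

Either penalty would be added to the data loss during training. The L2 penalty punishes large weights disproportionately, while the L1 penalty tends to drive some weights exactly to zero.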

This post also covers how to use dropout on your input layers. Regularization prevents the model from overfitting by adding extra information to it. In the context of machine learning, regularization is the process that regularizes, or shrinks, the coefficients towards zero.

Machine learning systems are thus programmed to learn and improve from experience automatically. L1 regularization is also known as Lasso Regression. Other methods include limiting the number of hidden units and applying norm penalties.

In this post, let's go over some of the widely used regularization techniques and the key differences between them. Regularization is an important concept in machine learning. It is a form of regression that constrains, regularizes, or shrinks the coefficient estimates towards zero.

Overfitting means the model is not able to generalize to unseen data. A simple and powerful regularization technique for neural networks and deep learning models is dropout. In other words, this technique discourages learning a more complex or flexible model, so as to avoid the risk of overfitting.
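As a rough illustration of what dropout does to a layer's activations, here is a dependency-free sketch of the common "inverted dropout" formulation. It is not the Keras implementation; the function name, signature, and seed are assumptions made for this example.

```python
import random


def dropout(activations, rate, rng, training=True):
    """Inverted dropout: zero each activation with probability `rate` and
    scale the survivors by 1 / (1 - rate) so the expected value is unchanged."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)  # dropout is disabled at inference time
    keep_prob = 1.0 - rate
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]


rng = random.Random(0)  # fixed seed so the sketch is reproducible
hidden = [1.0, 2.0, 3.0, 4.0]
print(dropout(hidden, rate=0.5, rng=rng))                  # each unit is 0.0 or doubled
print(dropout(hidden, rate=0.5, rng=rng, training=False))  # unchanged
```

Because a different random subset of units is dropped on every training step, the network cannot rely on any single unit, which is the intuition behind dropout's regularizing effect.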

Regularization also enhances the performance of models on new inputs. In this post you will discover activation regularization as a technique to improve the generalization of learned features in neural networks.

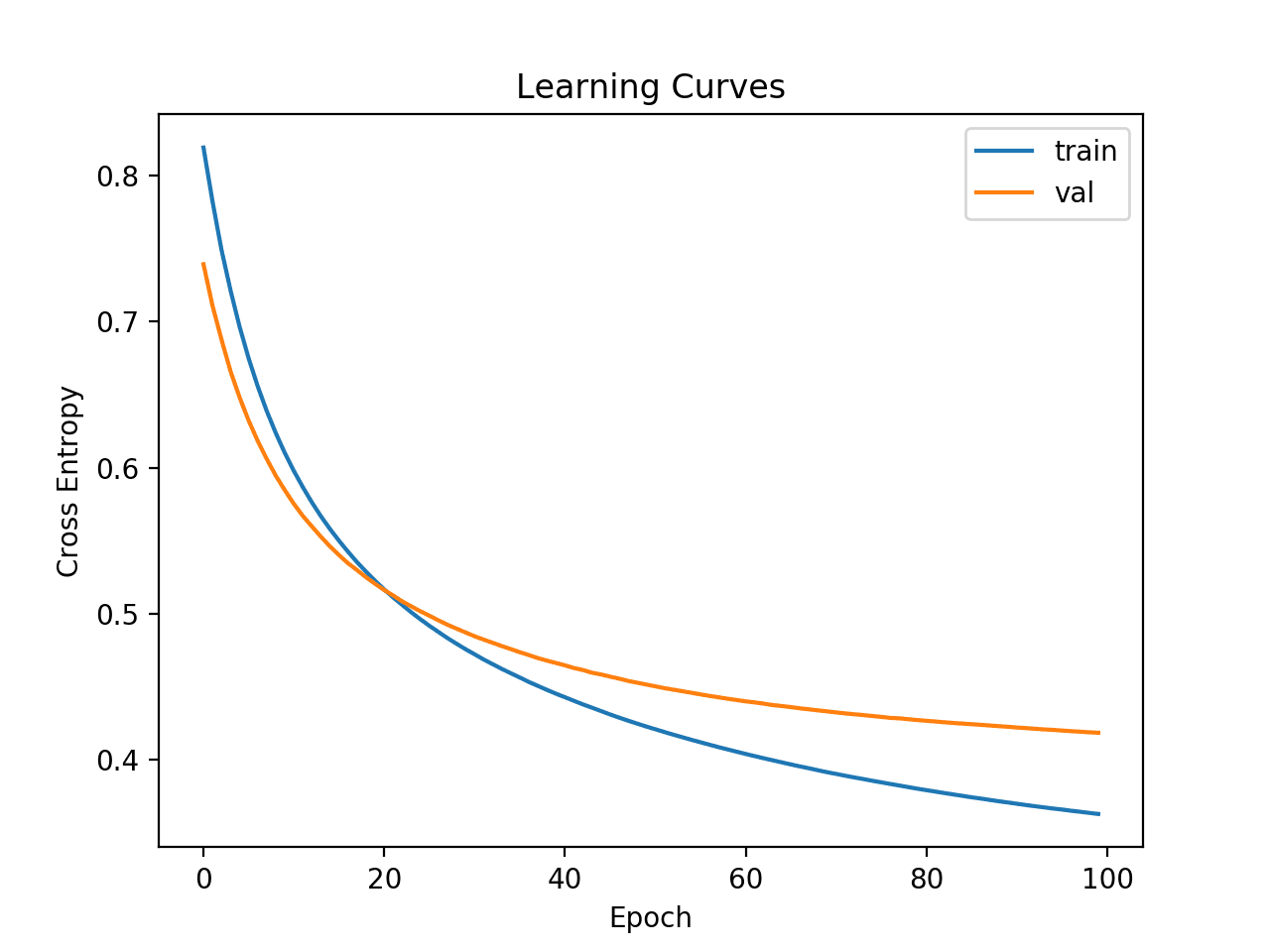

Hi, I'm Jason Brownlee, PhD, and I help developers like you skip years ahead. This is exactly why we use it for applied machine learning. In this section we will demonstrate how to use dropout regularization to reduce overfitting of an MLP on a simple binary classification problem.

In general, regularization means to make things regular or acceptable. A simple relation for linear regression looks like this. In my last post I covered the introduction to regularization in supervised learning models.
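The simple linear-regression relation mentioned above is usually written as follows. The notation is assumed here for illustration: $Y$ is the response, $X_1, \dots, X_p$ are the features, and $\beta_0, \dots, \beta_p$ are the coefficients.

$$
Y \approx \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_p X_p
$$

Regularized regression fits the same relation but penalizes large values of $\beta_1, \dots, \beta_p$, which shrinks the coefficient estimates towards zero.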

Weight regularization provides an approach to reduce the overfitting of a deep learning neural network model on the training data and improve the performance of the model on new data, such as the holdout test set. Regularization is one of the most important concepts of machine learning. If the model is logistic regression, then the loss is the log loss (cross-entropy).

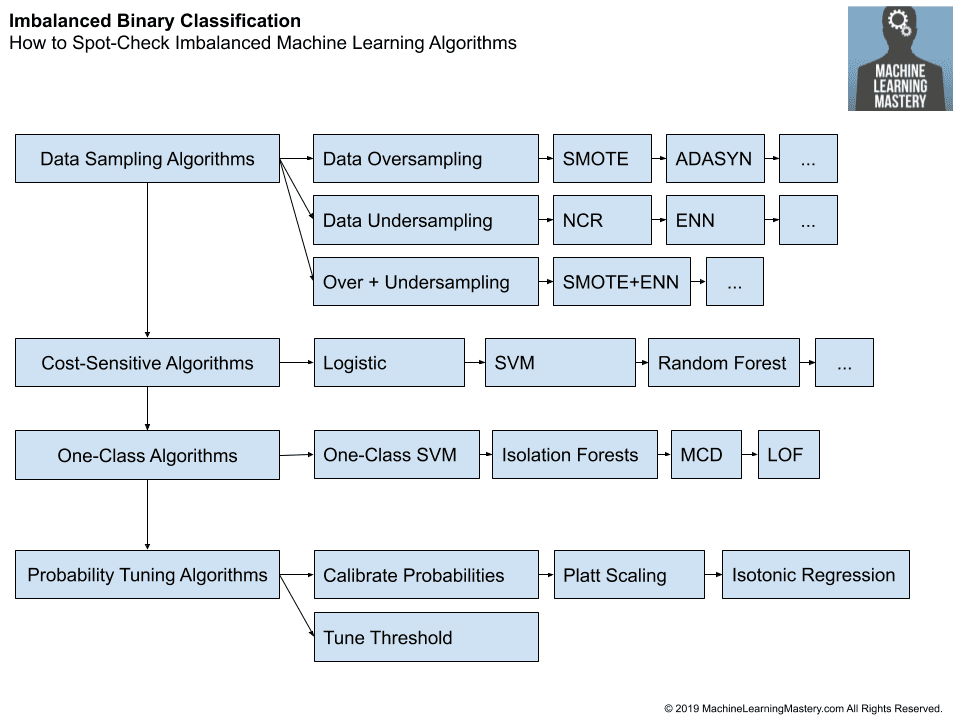

The most common way to reduce overfitting is through regularization methods.

Regularization is a technique that prevents the model from overfitting by adding extra information to it. Dropout Regularization Case Study. This example provides a template for applying dropout regularization to your own neural network for classification and regression problems.

After reading this post you will know the concept of regularization. Deep learning neural networks are likely to quickly overfit a training dataset with few examples.

I have tried my best to incorporate all the whys and hows. Overfitting is a phenomenon that occurs when a machine learning model is constrained to the training set and is not able to perform well on unseen data. Regularization is a technique used to reduce errors by fitting the function appropriately on the given training set and avoiding overfitting.

Hello reader! This blog post aims at a thorough understanding of regularization techniques. Topics include the equation of a general learning model, the regularized cost function, and gradient descent.
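To illustrate a regularized cost function together with gradient descent, here is a minimal sketch for one-feature linear regression with an L2 penalty. The cost scaling (dividing by `2m`), the unpenalized intercept, the learning rate, and the toy data are all assumptions made for this example, not details from the original post.

```python
def ridge_gradient_descent(xs, ys, lam, lr=0.05, steps=2000):
    """Fit y ~ w * x + b by gradient descent on the regularized cost
    J(w, b) = (1 / 2m) * sum((w*x + b - y)^2) + (lam / 2m) * w^2.
    The intercept b is left unpenalized, a common convention."""
    m = len(xs)
    w, b = 0.0, 0.0
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = (sum(e * x for e, x in zip(errors, xs)) + lam * w) / m
        grad_b = sum(errors) / m
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # toy data generated from y = 2x + 1
print(ridge_gradient_descent(xs, ys, lam=0.0))   # approaches slope 2, intercept 1
print(ridge_gradient_descent(xs, ys, lam=10.0))  # slope is shrunk towards zero
```

The only change from plain gradient descent is the extra `lam * w` term in the slope gradient, which pulls the weight towards zero on every step.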

Ensembles of neural networks with different model configurations are known to reduce overfitting, but require the additional computational expense of training and maintaining multiple models. Further methods include L2 and L1 regularization and early stopping. Activity or representation regularization provides a technique to encourage the learned representations (the output or activation of the hidden layer or layers of the network) to stay small and sparse.
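Early stopping, one of the methods named above, can be sketched as a simple rule over recorded validation losses. The function name, the `patience` parameter, and the loss values below are hypothetical and serve only to illustrate the idea.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the best epoch, stopping once the validation
    loss has failed to improve for `patience` consecutive epochs."""
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # training would be halted here
    return best_epoch


# Hypothetical validation losses recorded after each training epoch.
history = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.40]
print(early_stopping_epoch(history, patience=2))  # → 3
```

In this toy run, training stops after two epochs without improvement, so the later drop to 0.40 is never seen. That is the trade-off a small `patience` value makes.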

You will also learn how the dropout regularization technique works. Machine learning involves equipping computers to perform specific tasks without explicit instructions. Topics in neural network regularization (Srihari, Machine Learning) include the definition of regularization and methods such as limiting capacity.

Let us understand this concept in detail. Regularization is the most widely used technique for penalizing complex models in machine learning; it is deployed to reduce overfitting, or shrink generalization error, by keeping network weights small.

It is a form of regression that shrinks the coefficient estimates towards zero. Data scientists typically use regularization in machine learning to tune their models during training. In other words, this technique discourages learning a more complex or flexible model, to avoid the problem of overfitting.

Deep learning is prone to overfitting, since the model is able to adapt freely to large amounts of complex data. Cross-validation can be used to determine the regularization coefficient.
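One way to picture choosing the regularization coefficient by cross-validation is the sketch below. It assumes a one-feature ridge model without an intercept, contiguous (unshuffled) folds, and made-up data; all function names are hypothetical.

```python
def fit_ridge_1d(xs, ys, lam):
    """Closed-form ridge slope for y ~ w * x (no intercept):
    w = sum(x*y) / (sum(x*x) + lam)."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)


def cv_error(xs, ys, lam, k):
    """Mean squared validation error of fit_ridge_1d over k contiguous folds."""
    n = len(xs)
    total = 0.0
    for fold in range(k):
        lo, hi = fold * n // k, (fold + 1) * n // k
        train_x, train_y = xs[:lo] + xs[hi:], ys[:lo] + ys[hi:]
        w = fit_ridge_1d(train_x, train_y, lam)
        total += sum((w * x - y) ** 2 for x, y in zip(xs[lo:hi], ys[lo:hi]))
    return total / n


def select_lambda(xs, ys, candidates, k=3):
    """Pick the candidate lambda with the lowest cross-validated error."""
    return min(candidates, key=lambda lam: cv_error(xs, ys, lam, k))


xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1, 12.2]  # hypothetical noisy samples of y = 2x
print(select_lambda(xs, ys, candidates=[0.0, 0.1, 1.0, 10.0]))
```

Each candidate is scored only on data held out from fitting, so the selected value reflects performance on unseen examples rather than on the training set. In practice the data would usually be shuffled before being split into folds.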

No implementation of the regularized normal equation is presented, as it is very straightforward. In this article we will address the most popular regularization techniques, which are L1, L2, and dropout.
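For readers who would still like to see the regularized normal equation, here is a minimal dependency-free sketch. It assumes the first column of `X` is a column of ones for the intercept, which is left unpenalized. The helper names are hypothetical, and in practice a linear-algebra library would replace the hand-written solver.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x


def ridge_normal_equation(X, y, lam):
    """theta = (X^T X + lam * D)^-1 X^T y, where D is the identity matrix with
    a 0 in the top-left entry so the intercept column is not penalized."""
    p = len(X[0])
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(p)] for i in range(p)]
    xty = [sum(row[i] * t for row, t in zip(X, y)) for i in range(p)]
    for j in range(1, p):
        xtx[j][j] += lam  # add lam to every diagonal entry except the intercept
    return solve(xtx, xty)


X = [[1.0, x] for x in [0.0, 1.0, 2.0, 3.0, 4.0]]  # column of ones plus one feature
y = [1.0, 3.0, 5.0, 7.0, 9.0]                      # toy data generated from y = 2x + 1
print(ridge_normal_equation(X, y, lam=0.0))   # close to [1.0, 2.0]
print(ridge_normal_equation(X, y, lam=10.0))  # close to [3.0, 1.0]: slope shrunk
```

With `lam=0.0` this reduces to the ordinary normal equation. Increasing `lam` shrinks the slope while the unpenalized intercept adjusts to compensate.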
